Hey Embers,

Those of you dabbling in creating 3D compositions of multiple objects may quickly realize the same thing I have: it's damn hard. Knowing where to place things without a continuous render of how it all looks is both time-consuming and unnecessarily difficult.

While Cinder does have excellent mouse and keyboard support for camera control, positioning multiple objects (and possibly animating them to move and rotate) is a horse of a different color. To me, Blender seemed the obvious choice for getting a sense of where to place items in 3D space, after which I could use the values in my Cinder project to create an identical layout.

However, I'm having trouble re-creating the exact camera that Blender uses, and I'm not sure what's left to tweak. Allow me to demonstrate. Below is my scene in Blender:

The camera in Blender shows:

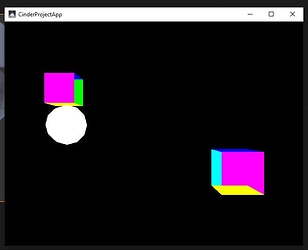

Re-creating the object positions/sizes and camera configuration yields this in Cinder:

As you can see, it's not completely wrong. The biggest issue is the framing, and I'm not sure what isn't matching. I've looked into getting the projection matrix out of Blender, but that seems to require a bit of Python I've yet to get working. I would have thought the field of view was the issue, but I've triple-checked that value and the unit both Cinder and Blender use. If anyone has any ideas of what to tweak, I'm all ears.
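A possible explanation for the framing mismatch, offered as an assumption rather than a confirmed diagnosis: with Sensor Fit set to Auto, Blender's camera panel reports the field of view across the larger sensor dimension (the horizontal one for a landscape render), whereas Cinder's `CameraPersp::setFov()` expects the vertical field of view in degrees. The 49.1° value is also consistent with a 35 mm lens on a 32 mm sensor, which I believe were the camera defaults in older Blender versions. The sketch below is plain C++ with no Cinder dependency; `fovFromLens`, `verticalFromHorizontalFov` and `horizontalFromVerticalFov` are hypothetical helpers, and the 16:9 aspect ratio in the example is an assumption about the render resolution.

```cpp
#include <cassert>
#include <cmath>

// All angles are in degrees, to match Blender's UI and CameraPersp::setFov().
constexpr double kPi = 3.14159265358979323846;

double toRadians( double degrees ) { return degrees * kPi / 180.0; }
double toDegrees( double radians ) { return radians * 180.0 / kPi; }

// Field of view covered by a sensor of the given width behind a lens of the
// given focal length (both in millimetres): fov = 2 * atan( sensor / (2 * focal) ).
double fovFromLens( double sensorMm, double focalMm )
{
	return toDegrees( 2.0 * std::atan( sensorMm / ( 2.0 * focalMm ) ) );
}

// Converts a horizontal FOV into the vertical FOV of a viewport with the given
// aspect ratio (width / height): the half-angle tangent shrinks by the aspect.
double verticalFromHorizontalFov( double horizontalDeg, double aspect )
{
	assert( aspect > 0.0 );
	double halfTan = std::tan( toRadians( horizontalDeg ) * 0.5 );
	return toDegrees( 2.0 * std::atan( halfTan / aspect ) );
}

// Inverse of the above: vertical FOV back to horizontal FOV.
double horizontalFromVerticalFov( double verticalDeg, double aspect )
{
	assert( aspect > 0.0 );
	double halfTan = std::tan( toRadians( verticalDeg ) * 0.5 );
	return toDegrees( 2.0 * std::atan( halfTan * aspect ) );
}
```

For example, `fovFromLens( 32.0, 35.0 )` comes out at about 49.13°, and `verticalFromHorizontalFov( 49.13, 16.0 / 9.0 )` at about 28.8°. If the assumption holds, the value to pass to `setFov()` would be the vertical one, with the Cinder camera's aspect ratio (`setAspectRatio()`) set to match the Blender render resolution.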

For posterity, the setup in Cinder is as follows:

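```cpp
void CinderProjectApp::setup()
{
	// Object layout, with values taken from the Blender scene
	mCube1Pos  = { 4.16731f, 0.0f, -1.85926f };
	mCube1Size = { 2, 2, 2 };
	mCube2Pos  = { -6.32747f, 4.8883f, 3.05238f };
	mCube2Size = { 2, 2, 2 };
	mSpherePos = { -4.36681f, -0.170805f, 0.440636f };

	// Camera intended to match the Blender render camera
	float fov = 49.1f;                     // field of view as shown in Blender (degrees)
	ci::vec3 eye    = { 0, -12.4907f, 0 }; // camera position
	ci::vec3 target = { 0, 0, 0 };         // point the camera looks at

	mBlenderCamera.setFov( fov );
	mBlenderCamera.lookAt( eye, target );
}
```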
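It may also be worth checking the coordinate convention. Blender is Z-up while Cinder, like OpenGL, is Y-up, and as far as I can tell `CameraPersp::lookAt( eye, target )` orients the camera using its world-up vector, which defaults to (0, 1, 0). With the eye at (0, -12.4907, 0) and the target at the origin, the view direction is parallel to that up vector, so the camera's roll is not uniquely defined. The sketch below assumes the values were copied straight from Blender and maps them with a -90° rotation about X; `Vec3`, `blenderToCinder` and `isParallelToUp` are hypothetical names, and `Vec3` only stands in for `ci::vec3` so the example compiles on its own.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for ci::vec3 so this compiles without Cinder.
struct Vec3 { float x, y, z; };

// Blender: right-handed, +Z up. Cinder: right-handed, +Y up.
// A -90 degree rotation about X maps Blender (x, y, z) to Cinder (x, z, -y),
// so Blender's up axis becomes Cinder's up axis and Blender's +Y becomes -Z.
Vec3 blenderToCinder( const Vec3 &b )
{
	return { b.x, b.z, -b.y };
}

// True when the view direction (target - eye) is parallel to the up vector,
// i.e. their cross product is (near) zero and the camera roll is ambiguous.
bool isParallelToUp( const Vec3 &eye, const Vec3 &target, const Vec3 &up )
{
	Vec3 d { target.x - eye.x, target.y - eye.y, target.z - eye.z };
	Vec3 c { d.y * up.z - d.z * up.y,
	         d.z * up.x - d.x * up.z,
	         d.x * up.y - d.y * up.x };
	return std::fabs( c.x ) + std::fabs( c.y ) + std::fabs( c.z ) < 1e-6f;
}
```

Converted this way, the Blender eye point (0, -12.4907, 0) becomes (0, 0, 12.4907) in Cinder, i.e. a camera on +Z looking down -Z at the origin, and the same mapping would apply to `mCube1Pos`, `mCube2Pos` and `mSpherePos`. Any object rotations would need the same change of basis.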

Thanks in advance,

Gazoo